Google Trends API alternative: Wikipedia Pageview Statistics

Unofficial Python API PyTrends stopped working? Here's an alternative approach that might work for you

I'm a huge fan of Google Trends and always curious about its predictive power. From daily to yearly trends, to finding similar topics or regional patterns, the research applications of search trends are endless. For example, it has been used to forecast stock market prices (Huang et al., 2019).

Google Trends Python package

Although Alphabet does not provide an official API, only the website trends.google.com, an unofficial Python implementation was released in 2016. The PyPI package 'pytrends' has over 200,000 monthly downloads and has served researchers around the world well.

Is pytrends not working in 2024? (Or the problem of unofficial API usage)

While the API is simple to use and offers the same functionality as the UI, it can be unreliable. I recently tried to find queries related to the election, and running the code gave me the following error:

```
>> pytrends.related_queries()
Traceback (most recent call last):
  File ".../pytrends/request.py", line 450, in related_queries
    req_json['default']['rankedList'][0]['rankedKeyword'])
IndexError: list index out of range
An error occurred while fetching related queries: The request failed: Google returned a response with code 429
```

HTTP status 429 means "Too Many Requests": Google was rate-limiting the unofficial client. Being in a programming flow state, I frantically started looking for other ways to understand search and topic trends. Wikipedia and its approach to public datasets stood out immediately, and I was able to redo my Google Trends analysis with public data from Wikipedia.

Wikipedia page views

Unknown to most people, Wikipedia has a rich API and tooling for working with the encyclopedia. For example, you can compare daily article views for cats and dogs here. One of its treasures is the public pageviews dataset, available since May 2015, which provides filtered view counts for Wikimedia Foundation projects and articles.

We can leverage this data to perform a similar request to what we previously did with pytrends. But this time it’s all official ✅

Python code to fetch last month's page view statistics for a Wikipedia article by title:

In the following code we use the public endpoint to fetch historical daily views for a given timeframe.
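The endpoint in the code below is a URL path made of fixed segments: project, access method, agent type, article title, granularity, and a start and end date. As a minimal sketch, the hypothetical helper build_pageviews_url (not part of any library) makes those segments explicit. It assumes the segment values documented for the Wikimedia REST API (access: all-access, desktop, mobile-app or mobile-web; agent: all-agents, user, spider or automated) and percent-encodes the title so characters like "/" do not break the path.

```python
from datetime import date
from urllib.parse import quote

# Hypothetical helper, not part of any library: assembles the per-article
# pageviews URL of the Wikimedia REST API from its path segments.
BASE = "https://wikimedia.org/api/rest_v1/metrics/pageviews/per-article"


def build_pageviews_url(title, start, end, project="en.wikipedia",
                        access="all-access", agent="all-agents",
                        granularity="daily"):
    # The API expects underscores instead of spaces; quote(..., safe="")
    # also encodes "/" so the title stays a single path segment.
    safe_title = quote(title.replace(" ", "_"), safe="")
    return "/".join([
        BASE, project, access, agent, safe_title, granularity,
        start.strftime("%Y%m%d"), end.strftime("%Y%m%d"),
    ])


# Only human traffic ("user") for September 2024
url = build_pageviews_url("Python (programming language)",
                          date(2024, 9, 1), date(2024, 9, 30), agent="user")
print(url)
```

Switching agent from all-agents to user drops traffic that Wikimedia classifies as spiders or automated, which is arguably closer to the human-interest signal Google Trends measures.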

import requests

from datetime import datetime

import pandas as pd

# Function to get the page views over the last month

def get_page_views(title):

end_date = datetime.datetime.now().strftime('%Y%m%d')

start_date = (datetime.datetime.now() - datetime.timedelta(days=30)).strftime('%Y%m%d')

url = f"https://wikimedia.org/api/rest_v1/metrics/pageviews/per-article/en.wikipedia/all-access/all-agents/{title}/daily/{start_date}/{end_date}"

res = requests.get(url, headers={

"User-Agent": "CUSTOM USERAGENT TO IDENTIFY YOUR API CALL"

})

if res.is_ok:

d0 = pd.DataFrame(res.json()['items'])

d0['timestamp'] = pd.to_datetime(d0['timestamp'],

format='%Y%m%d%H')

return d0

else:

print(f"Failed to retrieve data for {title}")

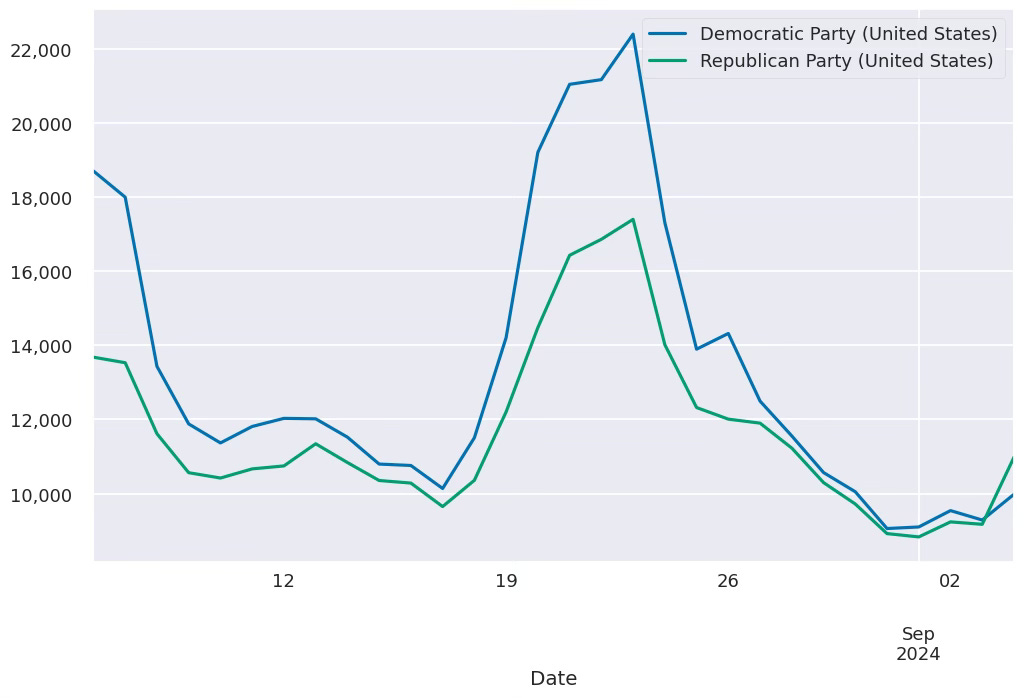

return NoneNow this gave me exactly the data I was looking for. Here you can see what happens, when the views of two political parties are compared
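Google Trends does not show raw counts: it divides by total search volume and then scales each series so the highest point in the selected range is 100. To put two articles on a similar 0 to 100 scale, a minimal sketch could rescale both series by their joint maximum. The helper trends_scale is hypothetical, only mimics the 0 to 100 scaling step (not Google's normalization by total volume), and the numbers below are toy values, not real page views.

```python
def trends_scale(series_by_title):
    """Rescale several view-count series to 0-100 by their joint maximum.

    series_by_title maps an article title to a list of daily view counts,
    for example the "views" column returned by get_page_views.
    """
    peak = max((max(v) for v in series_by_title.values() if v), default=0)
    if peak == 0:
        # Nothing to scale: keep every series at zero
        return {title: [0 for _ in views] for title, views in series_by_title.items()}
    return {
        title: [round(100 * x / peak) for x in views]
        for title, views in series_by_title.items()
    }


# Toy numbers for illustration only
scaled = trends_scale({"Article_A": [200, 400, 800], "Article_B": [80, 160, 40]})
print(scaled)  # {'Article_A': [25, 50, 100], 'Article_B': [10, 20, 5]}
```

With two DataFrames from get_page_views, you would pass something like {"A": df_a["views"].tolist(), "B": df_b["views"].tolist()}, assuming both cover the same days.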

Tip: How to find the title of a Wikipedia article via the Python search API?

Sometimes it’s tricky to find the matching article. Here is a simple snippet that searches Wikipedia for a keyword and returns the title of the first result:

```python
# install wikipedia-api with: pip install wikipedia-api
import wikipediaapi

wiki_wiki = wikipediaapi.Wikipedia("YOUR USER AGENT", "en")


# Return the title of the first search result for the query,
# with spaces replaced by underscores (the form the pageviews API expects)
def search_term(query):
    params = {
        "action": "query",
        "list": "search",
        "srsearch": query,
        "srlimit": 1,
    }
    # Note: _query is a private helper of wikipedia-api and may change between versions
    data = wiki_wiki._query(wiki_wiki.page(""), params)
    titles = [result["title"].replace(" ", "_") for result in data["query"]["search"]]
    # Return None instead of raising when the search has no hits
    return titles[0] if titles else None
```

For example, search_term("Python") will return Python_(programming_language). We can now combine both functions:

```python
df = get_page_views(search_term("Python"))
```

This returns the daily page views for the top search result for "Python".
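The search snippet relies on _query, a private method of wikipedia-api that could change without notice. A minimal alternative, assuming only the Python standard library and the public MediaWiki Action API (action=query&list=search on en.wikipedia.org/w/api.php), sends the same parameters directly. The names search_title and first_title are hypothetical.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

API_URL = "https://en.wikipedia.org/w/api.php"


def first_title(data):
    """Pick the first hit from an action=query&list=search JSON response."""
    hits = data.get("query", {}).get("search", [])
    # Underscores instead of spaces, as the pageviews API expects
    return hits[0]["title"].replace(" ", "_") if hits else None


def search_title(query, user_agent="YOUR USER AGENT"):
    # Same search parameters as search_term, plus format=json
    params = {
        "action": "query",
        "list": "search",
        "srsearch": query,
        "srlimit": 1,
        "format": "json",
    }
    req = Request(f"{API_URL}?{urlencode(params)}", headers={"User-Agent": user_agent})
    with urlopen(req, timeout=10) as resp:
        return first_title(json.load(resp))
```

Splitting the parsing into first_title keeps the network call thin and makes the parsing easy to test; get_page_views(search_title("Python")) should then behave like the combined call with search_term.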

🎉🎉🎉

Now we can move on from Google Trends. But wait, what about more granular data?

In some cases hourly views matter. The next section shows how to get them; it requires a bit more pre-processing, but see for yourself.

How to get hourly page view statistics for Wikipedia in Python?

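What's the best way to retrieve hourly page view statistics for Wikipedia articles in Python, ideally from official Wikimedia sources? The per-article REST endpoint used above only supports daily and monthly granularity, so the code below instead reads the official hourly pageview dump files from dumps.wikimedia.org and filters them for the article you are interested in.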

```python
# install polars with `pip install polars`
import polars as pl
from datetime import datetime, timedelta

# Set your start date and how many hours you want to fetch.
# Each hourly dump file is large, so 30 days means a lot of downloading.
start_date = datetime(2024, 9, 1, hour=0, minute=0, second=0)
max_hours = 24 * 30

all_views = []
for i in range(max_hours):
    # Move forward one hour per iteration
    date = start_date + timedelta(hours=i)
    # e.g. 2024/2024-09/pageviews-20240901-000000
    formatted = date.strftime("%Y/%Y-%m/pageviews-%Y%m%d-%H0000")
    wiki_views = pl.read_csv(
        f"https://dumps.wikimedia.org/other/pageviews/{formatted}.gz",
        separator=" ",
        has_header=False,
        new_columns=["lang", "article", "views", "_"],
        quote_char=None,  # article titles may contain quote characters
        infer_schema_length=0,  # read every column as a string first...
    ).with_columns(
        # ...then make the view counts numeric (bad values become null)
        pl.col("views").cast(pl.Int64, strict=False)
    ).filter(pl.col("lang") == "en", pl.col("views") > 4)
    print("fetched date", date)
    all_views.append(
        wiki_views.drop("_").with_columns(pl.lit(date).alias("date"))
    )

df = pl.concat(all_views)

# Define a search word:
title = "Python_(programming_language)"

python_views = df.filter(
    pl.col("article").str.starts_with(title)
).sort("date")
print(python_views)
```
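A quick sanity check is to sum the hourly counts per day and compare them with the daily numbers from get_page_views. They should roughly line up, though the views > 4 cutoff and the desktop-only "en" filter in the hourly code will make them differ. A minimal standard-library sketch, assuming (timestamp, views) pairs; hourly_to_daily is a hypothetical helper:

```python
from collections import defaultdict
from datetime import datetime


def hourly_to_daily(rows):
    """Sum (timestamp, views) pairs into per-day totals, sorted by day."""
    totals = defaultdict(int)
    for ts, views in rows:
        # Group by calendar day of the hourly timestamp
        totals[ts.date()] += views
    return sorted(totals.items())


# Toy rows for illustration only
rows = [
    (datetime(2024, 9, 1, 0), 5),
    (datetime(2024, 9, 1, 23), 7),
    (datetime(2024, 9, 2, 1), 3),
]
print(hourly_to_daily(rows))
```

With the polars DataFrame above, the pairs could come from python_views.select("date", "views").iter_rows(), or the whole aggregation could stay in polars with python_views.group_by(pl.col("date").dt.date().alias("day")).agg(pl.col("views").sum()).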

To understand the data, we can look up the documentation on Wikipedia:

The public pageviews dataset has per-article and per-project view counts for all Wikimedia Foundation projects since May 2015.
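Each line of an hourly dump file has four space-separated fields: domain code, page title, view count and total response size (the "_" column in the code above). The hourly code keeps only the domain code "en", which is desktop English Wikipedia; in the same convention, mobile web traffic appears under "en.m". A minimal sketch of a line parser that adds both together, assuming that four-field layout; parse_line and english_views are hypothetical names and the sample lines are made up:

```python
from collections import Counter


def parse_line(line):
    """Split one dump line into (domain_code, title, views), or None if malformed."""
    parts = line.rstrip("\n").split(" ")
    # Expect: domain_code page_title count_views total_response_size
    if len(parts) != 4 or not parts[2].isdigit():
        return None
    return parts[0], parts[1], int(parts[2])


def english_views(lines, domains=("en", "en.m")):
    """Total views per title across English desktop ("en") and mobile ("en.m")."""
    totals = Counter()
    for line in lines:
        parsed = parse_line(line)
        if parsed and parsed[0] in domains:
            totals[parsed[1]] += parsed[2]
    return totals


# Made-up sample lines for illustration only
sample = [
    "en Python_(programming_language) 120 0\n",
    "en.m Python_(programming_language) 80 0\n",
    "de Python_(Programmiersprache) 30 0\n",
    "garbage\n",
]
print(english_views(sample))
```

In the polars version, the same idea would mean filtering with pl.col("lang").is_in(["en", "en.m"]) and summing views per article and hour.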

This concludes our coding adventure replacing Google Trends with daily or hourly Wikipedia page views. You can find the gist on my GitHub (@franz101).
